Equality watchdog addresses discrimination in use of artificial intelligence

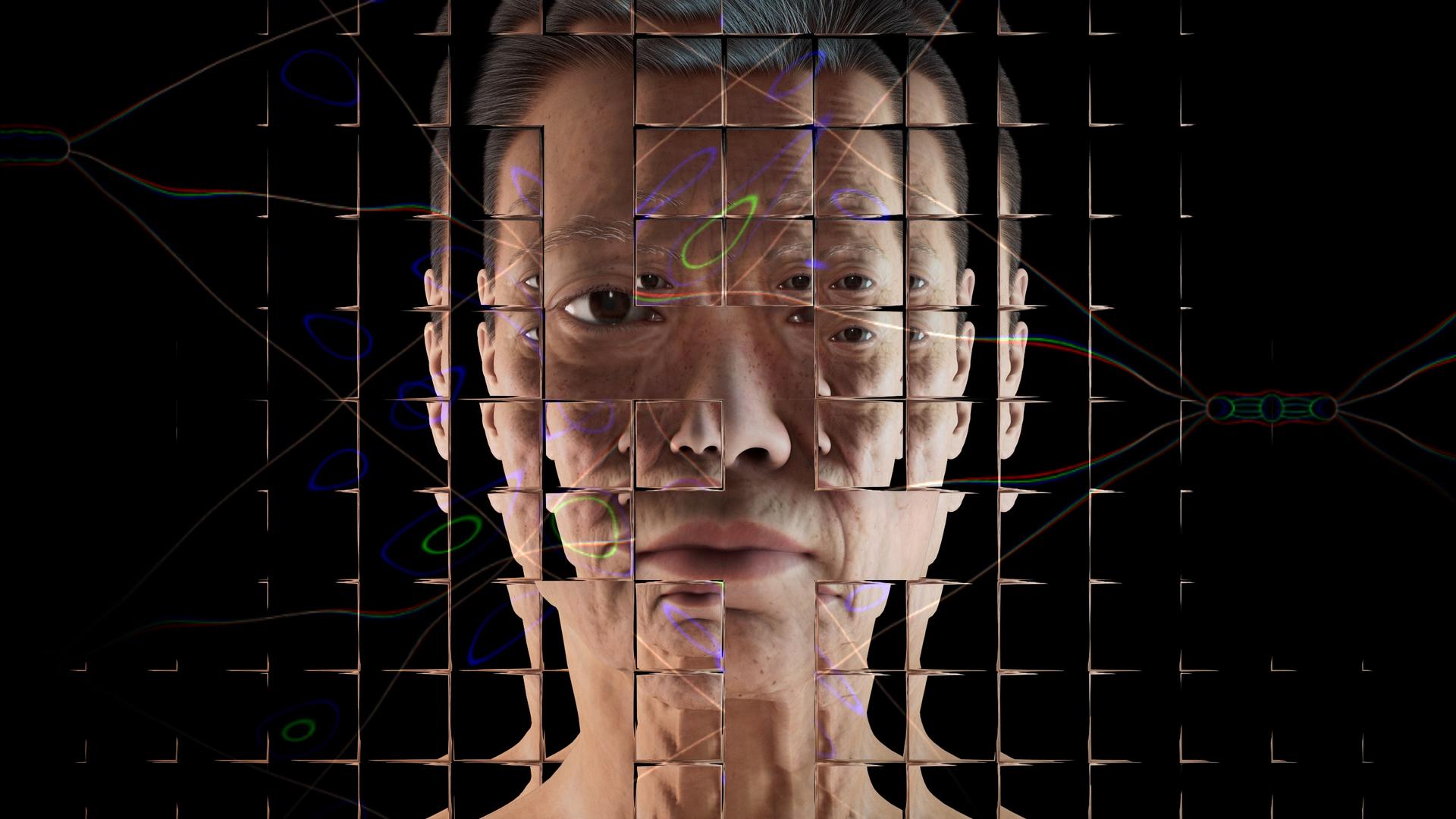

The use of artificial intelligence by public bodies is to be monitored by Britain’s equality regulator, the EHRC, to ensure technologies are not discriminating against people.

There is emerging evidence that bias built into algorithms can lead to less favourable treatment of people with protected characteristics such as race and sex.

The Equality and Human Rights Commission (EHRC) has made tackling discrimination in AI a major strand of its new three-year strategy and is publishing new guidance to help organisations avoid breaches of equality law, including the public sector equality duty (PSED). The guidance gives practical examples of how AI systems may be causing discriminatory outcomes.

From October, the Commission will work with a cross-section of around 30 local authorities to understand how they are using AI to deliver essential services, such as benefits payments, amid concerns that automated systems are inappropriately flagging certain families as a fraud risk. The EHRC is also exploring how best to use its powers to examine how organisations are using facial recognition technology, following concerns that the software may be disproportionately affecting people from ethnic minorities.

These interventions will improve how organisations use AI and encourage public bodies to take action to address any negative equality and human rights impacts.

Marcial Boo, chief executive of the EHRC, said:

“While technology is often a force for good, there is evidence that some innovation, such as the use of artificial intelligence, can perpetuate bias and discrimination if poorly implemented. Many organisations may not know they could be breaking equality law, and people may not know how AI is used to make decisions about them. It’s vital for organisations to understand these potential biases and to address any equality and human rights impacts. As part of this, we are monitoring how public bodies use technology to make sure they are meeting their legal responsibilities, in line with our guidance published today. The EHRC is committed to working with partners across sectors to make sure technology benefits everyone, regardless of their background.”

The monitoring projects will last several months and will report initial findings early next year.

David Sharp, CEO of International Workplace, said:

“The ethics of artificial intelligence, and its impact on society, are rightly attracting a lot of attention. The pace of AI-driven technologies is advancing rapidly, with developments in the performance of large language models and image generation tools such as DALL-E 2 being made every month.

“This new guidance from the EHRC is welcome and provides useful information to help public sector organisations address some of the risks around bias, especially where the Public Sector Equality Duty applies. The devil is in the detail, however, and non-specialists are likely to face the same problems that many in the technology sector face themselves, namely opaque ‘black box’ algorithms that are hard to explain, where it’s hard to understand the implications of their use.

“Assessing the impact of a proposed or existing AI in potentially causing discrimination is a worthwhile activity but is fraught with difficulty, not least because it’s hard to know what you don’t know. Perhaps the most obvious example of this is the use of AI in automating the recruitment process where – on the face of it – the use of technology might be considered to make better, more objective decisions than humans. But Amazon’s AI recruiting tool ended up shortlisting more men than women because the algorithm was looking to recruit similar people to those who already worked there (ergo, men). Companies such as HireVue have come in for criticism for ‘unfair and deceptive’ practices in their use of facial recognition software. And a fascinating study by Bavarian Broadcasting using professional actors found that the AI rated one candidate as less conscientious when video interviewing wearing glasses, and another as more open and less neurotic when appearing with a bookshelf in the background.

“Concerns about the use of AI also go beyond the bounds of algorithmic bias to address wider issues about surveillance and data privacy, and the huge demand such technologies place on the planet’s precious resources, described in detail in Kate Crawford’s Atlas of AI: Power, Politics and the Planetary Costs of Artificial Intelligence.”

The guidance, ‘Artificial intelligence in public services’, advises organisations to consider how the PSED applies to automated processes, to be transparent about how the technology is used and to keep systems under constant review.

In the private sector, the EHRC is currently supporting a taxi driver in a race discrimination claim regarding Uber’s use of facial recognition technology for identification purposes.

Image by Alan Warburton / © BBC / Better Images of AI / Virtual Human / CC-BY 4.0